1993 - Departure from the Deskside Server Computer.

After the Institute was founded in 1992, its IT infrastructure was based primarily on thirty-five IBM RS6000 workstations, which formed what would today be called a very loosely coupled cluster. Provisional computer rooms were set up as a prerequisite for venturing into high performance parallel computing. In 1993 an early eight-node IBM SP-1 machine with Power-1 processors and a theoretical peak performance of 1 GFlop/s was delivered, together with an IBM Model 990 file server and an IBM 3494 Tape Library; the latter two are shown in the photograph.

In service since 1993 - the IBM 3494 Tape Library.

It is really amazing that this robot has now been in service for more than 15 years! It started in 1993 with two 3490-C2A drives (800 MByte/cartridge) and two storage units. Today sixteen IBM E08/E09 drives and five storage units are installed, giving it a total capacity of more than ten Petabytes.

1994 to 2000 - a look back at the provisional computer rooms hosting the first IBM SP-2 and attached disk storage.

It was clear that the IBM SP-1 had to be upgraded very quickly. So in 1994 a 48-node IBM SP-2 was installed, which was later enhanced to 77 nodes (including 8 x Power-2 SC). The nodes used Power-2 processors clocked at 66 MHz, and initially every processor came with 64 MByte of memory. This system marked the Institute's first official entry into the List of the 500 most powerful computer systems of the world: the 48-node system was ranked 78th in November 1994. It was decommissioned in 2000.

Disk storage attached to the SP-2, based on IBM 2 GByte serial link disks and later on 4.5 and 9.1 GByte SSA disks.

2000 - New computer building & intermediary SP-2

By 2000 both the SP-2 and our facilities had reached their limits. A new subterranean building had to be constructed adjacent to the Institute's main building to host new computers. After a competitive bidding process, PIK and IBM signed a new contract for the delivery of an IBM SP-2 with 200 Power-3 processors, which was placed at rank 121 on the June 2001 edition of the Top 500 list. This machine was later upgraded to a cluster with 240 Power-4 processors.

January 2003 - Delivery & Installation of the first Teraflop Computer at PIK.

The Power-4 based IBM cluster with a total of 240 CPUs was a big step in terms of performance, weight and power consumption. On the Linpack benchmark a performance of 575 GFlop/s was measured (out of a theoretical peak performance of 1,056 GFlop/s), and the machine was ranked 142nd on the June 2003 edition of the List of the 500 most powerful computer systems of the world. This machine was operational until autumn 2008.

2005 - a Computer Center out of the Box.

Once the cluster upgrade was finished, other parts of the IT infrastructure had to be improved. In spring 2005 a first blade center hosting 14 dual-processor servers (IBM PowerPC and Intel Xeon) was installed at the Institute to replace old Internet server hardware. The blade center integrates redundant power supplies as well as storage area network and local area network switches, and it is connected to a Fibre Channel storage unit with a current capacity of 2 TB. The operating systems in use are IBM AIX, SuSE Linux Enterprise Edition, Microsoft Windows and VMware ESX.

2006 - first Linux Cluster installed at the Institute.

On the last day of 2006 a new Linux cluster, funded by the German Federal Ministry of Education and Research, was delivered to PIK for use by scientists in the CLME (1) project. This high performance 224-processor Linux cluster was delivered and installed by IBM Germany to increase the computational capacity beyond that of the IBM Power4 based cluster already in place.

The compute nodes of the cluster are arranged in four blade centers with 14 blades each. Each blade is equipped with two dual-core Intel Woodcrest CPUs (3 GHz, 1500 MHz FSB) and 8 GByte of memory. All nodes are diskless and boot Novell SLES10 Linux remotely. A high performance Voltaire InfiniBand network has been installed for parallel scientific applications and the parallel file system. For temporary storage of simulation output, a 70 TByte high performance parallel file system is attached directly to the compute blades via the InfiniBand network.

(1) CLME: climate version of the local model, used for the statistical analysis of extreme events.

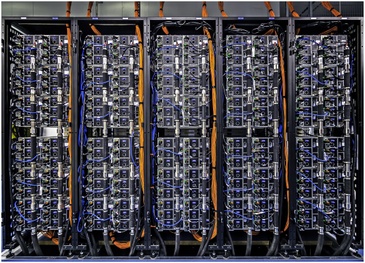

2008/2009 - IBM iDataPlex - 2,560 Intel cores and the biggest iron ever installed @ PIK.

Installation of a new cluster started immediately after the bids had been evaluated and a contract signed in autumn 2008. The new cluster, with 2,560 Intel cores, the SuSE Linux Enterprise Server operating system and a full service offering from IBM covering the years 2009 - 2012, was ranked 244th on the June 2009 List of the world's 500 most powerful computer systems. The machine came in the new IBM iDataPlex form factor, which had been designed specifically for high energy efficiency. In operation the system draws about 150 kW of electrical power, including management, networking and 200 TByte of attached disk space. Two thirds of the generated heat is removed directly by rear door heat exchangers.

2011 - One Petabyte of additional storage capacity installed for the HPC Cluster!

By spring 2011 the capacity of the original parallel file systems, installed together with the cluster at the end of 2008, was almost exhausted, so a secondary storage pool had to be added. This system, consisting of three fully equipped IBM DCS3700 storage servers, provides one Petabyte of additional raw capacity for scientific work. The volumes were mapped into the parallel file systems as a secondary storage pool without interrupting operations, and a quarter of the capacity was used to set up a large cache for backups.

2015 - Inauguration of new HPC facilities and installation of new supercomputer

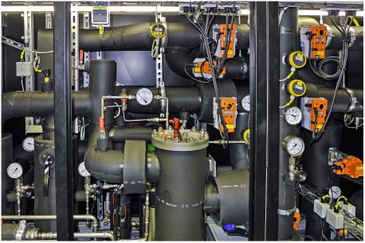

The inauguration of the new high performance computing facilities in PIK building A56, which had been planned and built since 2010, and the subsequent installation of a new supercomputer in summer 2015 were major milestones in the history of high performance computing at the Institute. The facility and the computer system were designed to form a single energy-efficient unit with the office spaces: heat from the directly water-cooled supercomputer is used to heat all offices during the winter season.

Since 2015 the supercomputer has received major upgrades in storage capacity and CPU and GPU resources. In 2024 this supercomputer was still heavily used; details about its make and model are available here.

2022/23 - After a long wait, quite a jump!

We are pleased to announce that the EU-wide tender process for replacing the Institute's current high performance computing (HPC) system, which started almost a year ago with the qualification of bidders, has been successfully completed: in November 2022 a contract for the delivery of a new HPC system was signed with pro-com Datensysteme GmbH.

The new system will be built almost exclusively on the very latest computer technologies, in particular the recently announced Lenovo Neptune direct water-cooled HPC server systems equipped with the latest generations of AMD "Genoa" CPUs and NVIDIA "Hopper" GPUs; IBM Elastic Storage System (ESS) units and TS4500 tape libraries; and, last but not least, "next data rate" (NDR) NVIDIA Quantum-2 InfiniBand network gear.

PIK is grateful to the Land Brandenburg, and in particular to the Ministry of Science, Research and Culture, for funding this important investment, which will enable the Institute to conduct its research at the highest level well into 2030.

2024 - Our new Lenovo (Compute) / IBM (Storage) HPC System passed acceptance tests!

Pictures courtesy of Bernhard Zepke, IBM (1), Lothar Lindenhan, PIK (2-7, 33-38), Bettina Saar, PIK (10-18), Roger Grzondziel, PIK (19-21), Gunnar Zierenberg, IBM (22-25), Karsten Kramer (26-32) and Lenovo / 2wMedia GmbH (39-41).